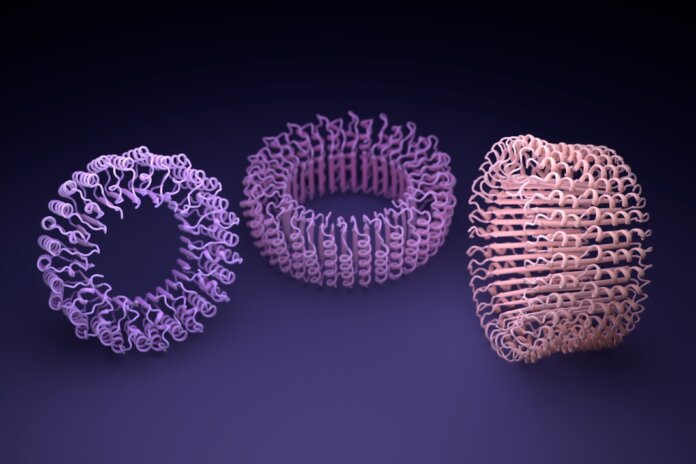

Two decades ago, engineering designer proteins was a dream.

Now, thanks to AI, custom proteins are a dime a dozen. Made-to-order proteins often have specific shapes or components that give them abilities new to nature. From longer-lasting drugs and protein-based vaccines to greener biofuels and plastic-eating proteins, protein design is rapidly becoming a transformative technology.

Custom protein design depends on deep learning techniques. With large language models—the AI behind OpenAI’s blockbuster ChatGPT—dreaming up millions of structures beyond human imagination, the library of bioactive designer proteins is set to rapidly expand.

“It’s hugely empowering,” Dr. Neil King at the University of Washington recently told Nature. “Things that were impossible a year and a half ago—now you just do it.”

Yet with great power comes great responsibility. As newly designed proteins increasingly gain traction for use in medicine and bioengineering, scientists are now wondering: What happens if these technologies are used for nefarious purposes?

A recent essay in Science highlights the need for biosecurity measures around designer proteins. Similar to ongoing conversations about AI safety, the authors say it's time to consider biosecurity risks and policies so custom proteins don't go rogue.

The essay is penned by two experts in the field. One, Dr. David Baker, director of the Institute for Protein Design at the University of Washington, led the development of RoseTTAFold, an algorithm that helped crack the half-century problem of predicting a protein's structure from its amino acid sequence alone. The other, Dr. George Church at Harvard Medical School, is a pioneer in genetic engineering and synthetic biology.

They suggest embedding a barcode into the genetic sequence of each new designer protein. If any designer protein became a threat, for example by helping trigger a dangerous outbreak, its barcode would make it easy to trace back to its origin.

The system basically provides “an audit trail,” the duo write.

Worlds Collide

Designer proteins are inextricably tied to AI. So are potential biosecurity policies.

Over a decade ago, Baker's lab used software to design and build a protein dubbed Top7. Proteins are made of building blocks called amino acids, each of which is encoded by a three-letter "word" in our DNA. Strung together like beads on a string, amino acids twist and fold into specific 3D shapes, which often further mesh into sophisticated architectures that support the protein's function.
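To make the DNA-to-protein link concrete: DNA is read three letters (a codon) at a time, and each codon specifies one amino acid or a "stop" signal. The short sketch below is an illustration, not something from the essay; it translates a DNA string using the standard genetic code. The names `CODON_TABLE` and `translate` are our own, and the sketch assumes a string containing only A, C, G and T that starts in the correct reading frame.

```python
from itertools import product

# Standard genetic code, listed in TCAG order (TTT, TTC, TTA, TTG, TCT, ...).
# "*" marks a stop codon. CODON_TABLE is an illustrative name, not a library API.
BASES = "TCAG"
AMINO_ACIDS = "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"
CODON_TABLE = {
    "".join(codon): aa for codon, aa in zip(product(BASES, repeat=3), AMINO_ACIDS)
}


def translate(dna: str) -> str:
    """Read DNA three letters at a time and return one-letter amino acid codes.

    Assumes only A/C/G/T and a correct reading frame (hypothetical helper).
    """
    dna = dna.upper()
    protein = []
    for i in range(0, len(dna) - 2, 3):
        aa = CODON_TABLE[dna[i:i + 3]]
        if aa == "*":  # stop codon: the amino acid chain ends here
            break
        protein.append(aa)
    return "".join(protein)


print(translate("ATGGCCAAGTAA"))  # → MAK
```

Real genes add complications this ignores, such as finding the start codon and, in our own cells, splicing out non-coding stretches.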

Top7 couldn’t “talk” to natural cell components—it didn’t have any biological effects. But even then, the team concluded that designing new proteins makes it possible to explore “the large regions of the protein universe not yet observed in nature.”

Enter AI. Several AI strategies have recently taken off, designing new proteins at supersonic speeds compared with traditional lab work.

One is structure-based AI similar to image-generating tools like DALL-E. Called diffusion models, these systems are trained by adding noise to real protein structures and learning to remove it. To design a new protein, they start from random noise and refine it step by step into a realistic structure that is compatible with biology.
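The "add noise, then remove it" idea can be sketched in a few lines. This is a minimal sketch of generic denoising-diffusion arithmetic under loudly stated assumptions: a 1,000-step linear noise schedule, a toy "backbone" of three atoms on a line, and a perfect noise prediction standing in for the trained neural network. It is not the architecture of any specific protein-design tool; real models work on full 3D backbones and must learn to predict the noise themselves.

```python
import math
import random

T = 1000  # number of noising steps (an assumed, commonly used value)
# Linear schedule (assumption): noise added per step grows from 1e-4 to 0.02.
BETAS = [1e-4 + (0.02 - 1e-4) * s / (T - 1) for s in range(T)]


def alpha_bar(t: int) -> float:
    """Share of the original signal that survives after t noising steps."""
    prod = 1.0
    for beta in BETAS[:t]:
        prod *= 1.0 - beta
    return prod


def add_noise(x0: list[float], t: int, rng: random.Random):
    """Forward process: jump directly to step t by blending x0 with Gaussian noise."""
    ab = alpha_bar(t)
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(ab) * x + math.sqrt(1.0 - ab) * e for x, e in zip(x0, eps)]
    return xt, eps


def estimate_clean(xt: list[float], eps_pred: list[float], t: int) -> list[float]:
    """Reverse direction: subtract the predicted noise to estimate clean coordinates."""
    ab = alpha_bar(t)
    return [(x - math.sqrt(1.0 - ab) * e) / math.sqrt(ab) for x, e in zip(xt, eps_pred)]


# Toy "backbone" (hypothetical): three atoms 3.8 Å apart on a line,
# flattened to (x, y, z, x, y, z, ...).
x0 = [0.0, 0.0, 0.0, 3.8, 0.0, 0.0, 7.6, 0.0, 0.0]
xt, true_noise = add_noise(x0, t=500, rng=random.Random(0))
# A trained network would *predict* the noise; here we cheat with the true noise.
recovered = estimate_clean(xt, true_noise, t=500)
```

By the last step almost none of the original signal remains (`alpha_bar(T)` is tiny), which is why generation can begin from pure noise; the hard part, omitted here, is training a network whose noise predictions are good enough to land on a physically sensible protein.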

Another strategy relies on large language models. Like ChatGPT, which learns from vast amounts of text, these algorithms are trained on millions of protein sequences; they find connections between protein "words" and distill them into a sort of biological grammar. The protein chains these models generate are likely to fold into stable structures resembling those found in nature. One example is ProtGPT2, which can engineer active proteins with shapes that could lead to new properties.
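The core trick of such models, generating a sequence one "word" at a time based on what came before, can be shown with a drastically simplified stand-in. The sketch below is not how ProtGPT2 works: that model is a large transformer, while this one only counts which amino acid follows which (a bigram model). The function names and the tiny training set are hypothetical, and the training strings are made up, not real proteins.

```python
import random
from collections import Counter, defaultdict


def train_bigram(sequences: list[str]) -> dict[str, Counter]:
    """Count which amino acid tends to follow which: a crude 'grammar' of proteins."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for seq in sequences:
        padded = "^" + seq + "$"  # "^" marks the start of a chain, "$" the end
        for prev, nxt in zip(padded, padded[1:]):
            counts[prev][nxt] += 1
    return counts


def generate(counts: dict[str, Counter], rng: random.Random, max_len: int = 60) -> str:
    """Write a new sequence one residue at a time, sampling each next residue by frequency."""
    residues, prev = [], "^"
    while len(residues) < max_len:
        options = counts[prev]
        nxt = rng.choices(list(options), weights=list(options.values()))[0]
        if nxt == "$":  # the model chose to end the chain
            break
        residues.append(nxt)
        prev = nxt
    return "".join(residues)


# Hypothetical toy training set (made-up strings, not real proteins).
corpus = ["MKTAYIAKQR", "MKVLAAGKQR", "MSTAYLAKER"]
model = train_bigram(corpus)
print(generate(model, random.Random(42)))
```

A real protein language model conditions each choice on the whole preceding sequence rather than just the last residue, which is what lets it capture the long-range patterns that make a chain fold.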

Digital to Physical

These AI protein-design programs are also raising alarm bells. Proteins are the building blocks of life, so changing them could dramatically alter how cells respond to drugs, viruses, or other pathogens.

Last year, governments around the world announced plans to oversee AI safety. The technology wasn’t positioned as a threat. Instead, the legislators cautiously fleshed out policies that ensure research follows privacy laws and bolsters the economy, public health, and national defense. Leading the charge, the European Union agreed on the AI Act to limit the technology in certain domains.

Synthetic proteins weren't directly called out in the regulations. That's great news for making designer proteins, which could be kneecapped by overly restrictive regulation, write Baker and Church. However, new AI legislation is in the works, with the United Nations' advisory body on AI set to share guidelines on international regulation in the middle of this year.

Because the AI systems used to make designer proteins are highly specialized, they may continue to fly under regulatory radars, provided the field unites in a global effort to self-regulate.

At the 2023 AI Safety Summit, which did discuss AI-enabled protein design, experts agreed that documenting each new protein's underlying DNA is key. Like their natural counterparts, designer proteins are built from genetic code. Logging all synthetic DNA sequences in a database could make it easier to spot red flags for potentially harmful designs, such as a new protein with structures similar to known pathogenic ones.
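One simple way to "spot red flags" is to check whether an incoming DNA order shares long stretches with entries in a database of sequences of concern. The sketch below assumes exact matching of 12-letter chunks (k-mers) and a 50 percent threshold, both arbitrary choices, and its database holds a placeholder string rather than any real gene. Real screening pipelines typically rely on alignment tools such as BLAST and curated databases, and catching proteins with merely similar *structures*, as the summit discussion envisioned, would require structural comparison that this sequence-only check does not attempt.

```python
def kmers(seq: str, k: int) -> set[str]:
    """All overlapping substrings of length k (empty if seq is shorter than k)."""
    seq = seq.upper()
    return {seq[i:i + k] for i in range(len(seq) - k + 1)}


def screen(order: str, database: dict[str, str], k: int = 12, threshold: float = 0.5):
    """Flag database entries whose k-mers largely reappear in the ordered DNA.

    Hypothetical helper: 'containment' is the fraction of a reference's k-mers
    found in the order, so an order that hides a fragment inside extra DNA is still caught.
    """
    order_kmers = kmers(order, k)
    hits = []
    for name, ref in database.items():
        ref_kmers = kmers(ref, k)
        if not ref_kmers:
            continue  # reference shorter than k: nothing to compare
        containment = len(order_kmers & ref_kmers) / len(ref_kmers)
        if containment >= threshold:
            hits.append((name, round(containment, 2)))
    return hits


# Hypothetical "sequences of concern" database (placeholder string, not a real gene).
DATABASE = {"entry_1": "ATGCGTACCGGTTAGCTAGGCTTACCGATCGGATCCTTGCA"}
order = "GGGGGG" + DATABASE["entry_1"] + "CCCCCC"
print(screen(order, DATABASE))  # → [('entry_1', 1.0)]
```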

Biosecurity doesn't have to squash data sharing. Collaboration is critical for science, but the authors acknowledge it's still necessary to protect trade secrets. And as in AI, some designer proteins may be useful but too dangerous to share openly.

One way around this conundrum is to build safety measures directly into the synthesis process itself. For example, the authors suggest adding a barcode made of random DNA letters to each new genetic sequence. Before building the protein, a synthesis machine scans its DNA sequence and begins only once it finds the code.

In other words, the original designers of the protein can choose who to share the synthesis with—or whether to share it at all—while still being able to describe their results in publications.
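The gatekeeping step described here can be sketched as follows. Everything in it is an assumption for illustration: the essay, as summarized here, does not specify barcode length, where the barcode sits in the sequence, or how a machine learns which barcodes it may accept. The sketch simply appends a 20-letter random barcode and lets a hypothetical synthesizer check proceed only if an authorized barcode is present.

```python
import secrets


def make_barcode(length: int = 20) -> str:
    """A random string of DNA letters to serve as a barcode (length is an assumption)."""
    return "".join(secrets.choice("ACGT") for _ in range(length))


def tag(gene: str, barcode: str) -> str:
    """Attach the barcode to the designed sequence (appending is a hypothetical layout)."""
    return gene + barcode


def may_synthesize(construct: str, authorized: set[str]) -> bool:
    """Hypothetical synthesizer check: build only if an authorized barcode is present."""
    return any(code in construct for code in authorized)


barcode = make_barcode()
construct = tag("ATGGCCAAGTAA", barcode)
# The designer shares the barcode only with the labs they choose.
print(may_synthesize(construct, authorized={barcode}))  # → True
print(may_synthesize("ATGGCCAAGTAA", authorized={barcode}))  # → False
```

The design choice worth noting is that the secret lives in the barcode, not in the protein design: a paper can describe the protein in full while the ability to synthesize it stays with whoever holds an authorized code.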

A barcode strategy that ties making new proteins to a synthesis machine would also amp up security and deter bad actors, making it difficult to recreate potentially dangerous products.

“If a new biological threat emerges anywhere in the world, the associated DNA sequences could be traced to their origins,” the authors wrote.
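How such tracing might work is not spelled out, so the sketch below assumes a central registry that maps each barcode to the group that designed the sequence; the registry, its entries, and the `trace` function are all hypothetical. One real-world detail it does handle: DNA recovered from a sample may be read from either of its two strands, so the search also checks the reverse complement (the opposite strand, read in its own direction).

```python
COMPLEMENT = str.maketrans("ACGT", "TGCA")


def reverse_complement(dna: str) -> str:
    """The sequence of the opposite DNA strand, read in its own 5'-to-3' direction."""
    return dna.upper().translate(COMPLEMENT)[::-1]


def trace(sample: str, registry: dict[str, str]) -> list[str]:
    """Return the registered origin of every barcode found on either strand of the sample."""
    sample = sample.upper()
    other_strand = reverse_complement(sample)
    return [origin for code, origin in registry.items()
            if code in sample or code in other_strand]


# Hypothetical registry linking barcodes to the groups that designed each sequence.
REGISTRY = {
    "ACGTTGCAAGGCTTAACCGG": "Lab A (hypothetical)",
    "GATCGATCGGCCTTAAGGAT": "Lab B (hypothetical)",
}
found = "TTT" + "ACGTTGCAAGGCTTAACCGG" + "GGG"
print(trace(found, REGISTRY))  # → ['Lab A (hypothetical)']
print(trace(reverse_complement(found), REGISTRY))  # → ['Lab A (hypothetical)']
```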

It will be a tough road. Designer protein safety will depend on global support from scientists, research institutions, and governments, the authors write. However, there have been previous successes. Global groups have established safety and sharing guidelines in other controversial fields, such as stem cell research, genetic engineering, brain implants, and AI. Although not always followed—CRISPR babies are a notorious example—for the most part these international guidelines have helped move cutting-edge research forward in a safe and equitable manner.

To Baker and Church, open discussions about biosecurity will not slow the field. Rather, they can rally different sectors and engage the public so custom protein design can further thrive.

Image Credit: University of Washington
